Classifying Water and Fire Pokemon

Image classification has always fascinated me, and I have long been curious to learn the ins and outs of the subject. That said, I was sick and tired of seeing the same cat and dog classification over and over again (although they are adorable). I decided to rekindle a childhood memory and use Pokemon sprites to try to classify water and fire Pokemon, since the two types look so distinct.

To begin the project, I first needed to curate my data. I found a website that listed every Pokemon with its sprite and type, then used Beautiful Soup to scrape it and save each sprite with its type encoded in the filename. I then loaded these images into NumPy arrays and used the type in each filename to build the Y vector. I decided that 1 is fire and 0 is water.
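To make the labeling step concrete, here is a minimal sketch of how labels could be read back out of the filenames. The `<name>_<type>.png` naming scheme and the helper names are assumptions for illustration; the actual filenames in the project may look different.

```python
from pathlib import Path

# Hypothetical naming scheme: "<name>_<type>.png", e.g. "charmander_fire.png".
LABELS = {"fire": 1, "water": 0}


def label_from_filename(filename):
    """Return 1 for fire, 0 for water, or None for any other type."""
    stem = Path(filename).stem                      # e.g. "charmander_fire"
    pokemon_type = stem.rsplit("_", 1)[-1].lower()  # text after the last "_"
    return LABELS.get(pokemon_type)


def build_labels(filenames):
    """Keep only fire/water files and return (kept_files, y) in matching order."""
    kept, y = [], []
    for name in filenames:
        label = label_from_filename(name)
        if label is not None:
            kept.append(name)
            y.append(label)
    return kept, y


files = ["charmander_fire.png", "squirtle_water.png", "bulbasaur_grass.png"]
kept, y = build_labels(files)
print(kept, y)
```

Keeping the kept filenames alongside the labels makes it easy to load the images in the same order, so that row i of the image array lines up with entry i of the Y vector.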

Model

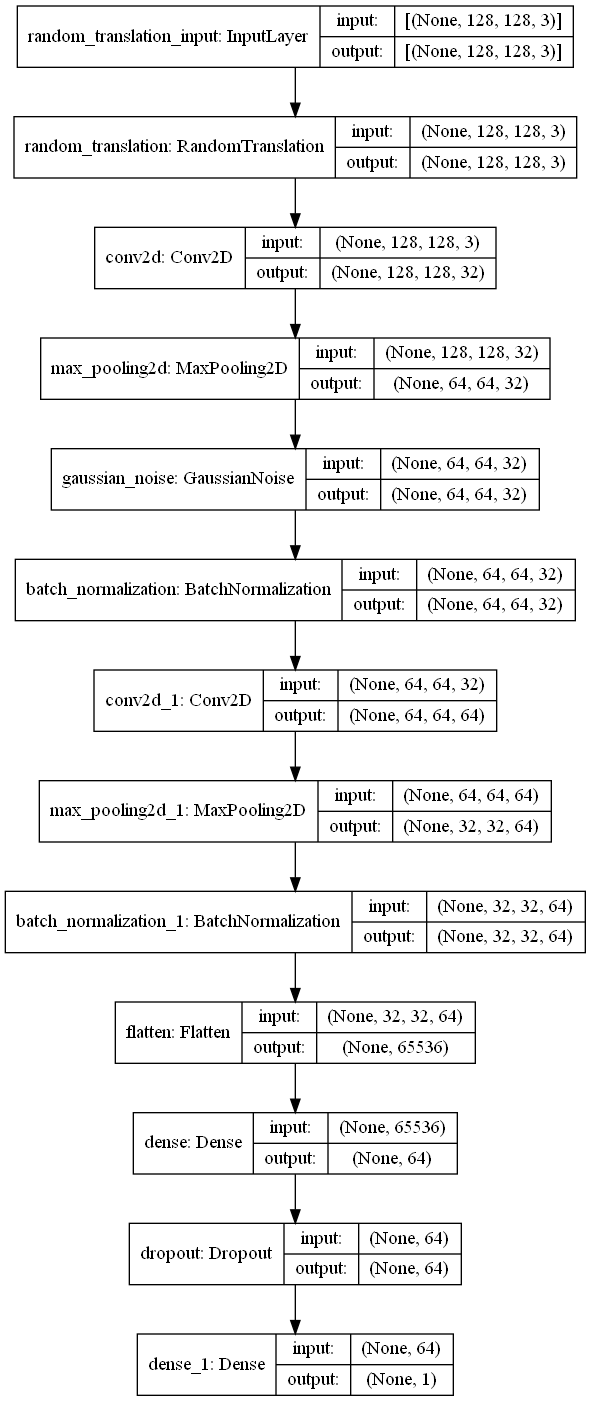

Next, I built a model to try to classify the different Pokemon types. To achieve this, I used a multilayer convolutional neural network.

My initial model was very simple: just 2 conv2d layers, followed by a layer to flatten the output before passing it to a dense output layer. However, this model was severely overfitting. To combat this, I added batch normalization layers, which keep the data flowing between layers regular and make training easier. They also seemed to help with overfitting.
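For intuition about what "keeping the data regular" means, here is a plain-Python sketch of the core computation behind batch normalization. It is only an illustration, not the layer used in the model: a real batch normalization layer also learns a scale and shift and tracks running statistics, which are left out here.

```python
import math


def batch_norm(batch, eps=1e-5):
    """Normalize each feature (column) of a batch to roughly zero mean and unit variance.

    `batch` is a list of equal-length feature lists. The learned scale and
    shift of a real layer are intentionally omitted from this sketch.
    """
    n = len(batch)
    n_features = len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(n_features)]
    variances = [
        sum((row[j] - means[j]) ** 2 for row in batch) / n
        for j in range(n_features)
    ]
    return [
        [(row[j] - means[j]) / math.sqrt(variances[j] + eps) for j in range(n_features)]
        for row in batch
    ]


# Two features on very different scales end up on a comparable scale.
activations = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
normalized = batch_norm(activations)
print(normalized)
```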

That being said, the model was still overfitting, which brings us to the image augmentation section. The model was getting too used to the images being centered, with no tilt or noise in them. To combat this, I used a random translation layer to shift the Pokemon up/down or left/right by up to 15%. Additionally, I applied a random rotation of up to 45 degrees to the images when they are initially loaded. Finally, I used a Gaussian noise layer in the model to add some noise to the batches during training. With this, the model was finally training and didn't seem to overfit anymore.
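To illustrate what two of these augmentations do to an image, here is a small plain-Python sketch of a random shift of up to 15% and additive Gaussian noise. It is a stand-in for the framework layers, not the code from the project; the function names, the zero fill for uncovered pixels, and the noise standard deviation of 0.05 are all assumptions. Rotation is left out to keep the sketch short.

```python
import random


def random_shift(image, max_fraction=0.15, rng=random):
    """Shift a 2D image (list of rows) by a random offset, zero-filling the gap.

    The offset in each direction is at most max_fraction of the image size.
    """
    height, width = len(image), len(image[0])
    max_dy = int(height * max_fraction)
    max_dx = int(width * max_fraction)
    dy = rng.randint(-max_dy, max_dy)
    dx = rng.randint(-max_dx, max_dx)
    shifted = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            src_r, src_c = r - dy, c - dx
            if 0 <= src_r < height and 0 <= src_c < width:
                shifted[r][c] = image[src_r][src_c]
    return shifted


def add_gaussian_noise(image, stddev=0.05, rng=random):
    """Add independent zero-mean Gaussian noise to every pixel."""
    return [[pixel + rng.gauss(0.0, stddev) for pixel in row] for row in image]


# A 20x20 blank image with one bright pixel in the middle.
image = [[0.0] * 20 for _ in range(20)]
image[10][10] = 1.0
augmented = add_gaussian_noise(random_shift(image))
```

Because a fresh offset and fresh noise are drawn on every call, the model sees a slightly different version of each sprite every time, which makes it harder to memorize exact pixel positions.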

Results

Above are some examples of how the algorithm classified the different Pokemon. Green captions mark Pokemon that were correctly identified as water types, and red captions mark those correctly identified as fire types. Yellow captions mark water types that were identified as fire types, and purple captions mark fire types that were identified as water types.

There are some interesting interpretations that we can draw from these results. The first thing that stands out is that color seems to be one of the major factors in categorizing the data. Almost all predominantly blue Pokemon were classified as water types, whether correctly or not. Litwick, which is a fire type, is an example where this generalization didn't work. On the other hand, Octillery is predominantly red, and the algorithm wrongly identified it as a fire type. One of the more perplexing false predictions is Samurott, which the algorithm identified as a fire type. Although it is difficult to say, its more jagged edges could be one of several contributing factors that led to this prediction.
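The caption colors boil down to a small lookup on the true label and the predicted label. A minimal sketch, assuming the model outputs a single fire probability and that 0.5 is the decision threshold (neither is confirmed by the project code, and the probabilities in the example are made up):

```python
def caption_color(true_label, predicted_prob, threshold=0.5):
    """Map a true label (1 = fire, 0 = water) and a predicted fire probability to a color."""
    predicted_label = 1 if predicted_prob >= threshold else 0
    if true_label == 0 and predicted_label == 0:
        return "green"   # water, correctly identified
    if true_label == 1 and predicted_label == 1:
        return "red"     # fire, correctly identified
    if true_label == 0 and predicted_label == 1:
        return "yellow"  # water, mistaken for fire
    return "purple"      # fire, mistaken for water


# Illustrative probabilities only, not actual model outputs.
print(caption_color(0, 0.9))  # a water type predicted as fire, like Octillery
print(caption_color(1, 0.2))  # a fire type predicted as water, like Litwick
```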

What's Next?

This analysis was an interesting look into image classification for a binary problem. As a next step, I would be interested in using more Pokemon types instead of restricting the project to only 2. There are 18 types of Pokemon, and any given Pokemon can have up to 2 types. My initial starting point for this project was actually to use all categories, but I ended up getting a very low probability rating and opted to build up from a simpler binary classifier.
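One way the labels could be extended to all 18 types is a multi-hot vector, with one slot per type and up to two slots set per Pokemon. This is only a sketch of a possible encoding, not something the project currently does; the `encode_types` helper and the ordering of the type list are assumptions.

```python
# The 18 Pokemon types; the order here is arbitrary.
TYPES = [
    "normal", "fire", "water", "electric", "grass", "ice",
    "fighting", "poison", "ground", "flying", "psychic", "bug",
    "rock", "ghost", "dragon", "dark", "steel", "fairy",
]


def encode_types(pokemon_types):
    """Return an 18-element multi-hot vector for a Pokemon with one or two types."""
    if not 1 <= len(pokemon_types) <= 2:
        raise ValueError("a Pokemon has one or two types")
    vector = [0] * len(TYPES)
    for pokemon_type in pokemon_types:
        vector[TYPES.index(pokemon_type.lower())] = 1
    return vector


# Litwick is a ghost/fire type, so two slots are set.
litwick = encode_types(["Ghost", "Fire"])
print(litwick)
```

With labels like this, each type gets its own yes/no prediction, so a dual-type Pokemon does not have to be forced into a single category.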

Feel free to check out the code by clicking on the link below: